research page backup

———————— COLLISION DETECTION ————————

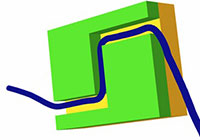

Efficient Local Planning Using Connection Collision Query

Min Tang, Young J. Kim, and Dinesh Manocha

We introduce a proximity query, connection collision query (CCQ), and use it for efficient and exact local planning in sampling-based motion planners. Given two collision-free configurations, CCQ checks whether these configurations can be connected by a given continuous path that either lies completely in the free space or penetrates any obstacle by a given threshold. Our approach is general, robust, and can handle different continuous path formulations. We have integrated the CCQ algorithm with sampling-based motion planners and can perform reliable local planning queries with little performance degradation, as compared to prior methods. Moreover, the CCQ-based exact local planner is about an order of magnitude faster than prior exact local planning algorithms.

Multi-core Collision Detection Between Deformable Models Using Front-based Decomposition

Min Tang and Dinesh Manocha

We present a parallel algorithm for fast continuous collision detection (CCD) between deformable models using multi-core processors. We use a hierarchical representation to accelerate these queries and present an incremental algorithm that exploits temporal coherence between successive frames. Our formulation distributes the computation among multiple cores by using fine-grained front-based decomposition. We also present efficient techniques to reduce the number of elementary tests and analyze the scalability of our approach. We have implemented the parallel algorithm on eight-core and sixteen-core PCs, and observe up to 7x and 13x speedups respectively, on complex benchmarks.

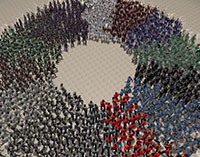

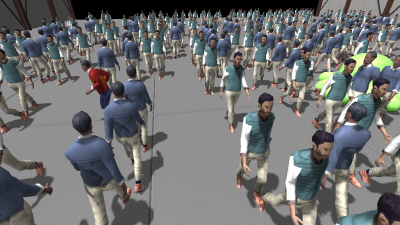

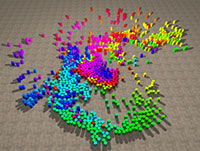

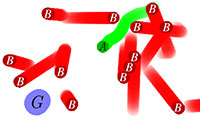

Highly Parallel Collision Avoidance for Multi-agent Simulation

Stephen J. Guy, Ming C. Lin, and Dinesh Manocha

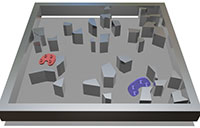

We present the ClearPath collision avoidance algorithm between multiple agents for real-time simulations. ClearPath extends the velocity obstacle (VO) from robotics and formulates the conditions for collision-free navigation as a quadratic optimization problem. We use a discrete optimization method to efficiently compute the motion of each agent and parallelize the algorithm by exploiting data and thread-level parallelism. ClearPath can robustly handle dense scenarios with hundreds of thousands of heterogeneous agents in a few milliseconds.

Fast Collision Detection for Deformable Models Using Representative-triangles

Sean Curtis and Dinesh Manocha

We present a new approach to accelerate collision detection for deformable models. Our formulation applies to all triangulated models and significantly reduces the number of elementary tests between features of the mesh, i.e., vertices, edges and faces. We introduce the notion of representative-triangles, standard geometric triangles augmented with mesh feature information and use this representation to achieve better collision query performance. The resulting approach can be combined with bounding volume hierarchies and works well for both inter-object and self-collision detection. We demonstrate the benefit of representative-triangles on continuous collision detection for cloth simulation and N-body collision scenarios. We observe up to a one-order of magnitude reduction in feature-pair tests and up to a 5x improvement in query time.

A Fast and Practical Algorithm for Generalized Penetration Depth Computation

Liangjun Zhang, Young J. Kim, and Dinesh Manocha

We present an efficient algorithm to compute the generalized penetration depth between rigid models. Given two overlapping objects, our algorithm attempts to compute the minimal translational and rotational motion that separates the two objects. We formulate the generalized penetration depth computation based on model-dependent distance metrics using displacement vectors. As a result, our formulation is independent of the choice of inertial and body-fixed reference frames, as well as specific representation of the configuration space. Furthermore, we show that the optimum answer lies on the boundary of the contact space and pose the computation as a constrained optimization problem. We use global approaches to find an initial guess and present efficient techniques to compute a local approximation of the contact space for iterative refinement.

Interactive Continuous Collision Detection Between Deformable Models Using Connectivity-based Culling

Min Tang, Sean Curtis, Sung-Eui Yoon, and Dinesh Manocha

We present an interactive algorithm for continuous collision detection between deformable models. We introduce two techniques to improve the culling efficiency and reduce the number of potentially colliding triangle candidate pairs. First, we present a novel formulation for continuous normal cones and use these normal cones to efficiently cull large regions of the mesh from self-collision tests. Second, we exploit the mesh connectivity and introduce the concept of orphan sets to eliminate almost all redundant elementary tests between adjacent triangles. In particular, we can reduce the number of elementary tests by many orders of magnitude. These culling techniques have been combined with bounding volume hierarchies and can result in one order of magnitude performance improvement as compared to prior algorithms for deformable models. We highlight the performance of our algorithm on several benchmarks, including cloth simulations, N-body simulations and breaking objects.

Interactive Collision Detection Between Deformable Models Using Chromatic Decomposition

Naga K. Govindaraju, Ilknur Kabul, Russell Gayle, Ming C. Lin, and Dinesh Manocha

We present an algorithm for accurately detecting all contacts, including self-collisions, between deformable models. We precompute a chromatic decomposition of a mesh into non-adjacent primitives using graph coloring algorithms. The chromatic decomposition enables us to check for collisions between non-adjacent primitives using a linear-time culling algorithm. As a result, we achieve higher culling efficiency and significantly reduce the number of false positives. We use our algorithm to check for collisions among complex deformable models consisting of tens of thousands of triangles for cloth modeling and medical simulation.

Efficient Inter- and Intra-object Collision Culling Using Graphics Hardware

Naga K. Govindaraju, Ming C. Lin, and Dinesh Manocha

We present a fast collision culling algorithm, QUICK-CULLIDE, for performing inter- and intra-object collision detection among complex models using graphics hardware. Our algorithm is based on CULLIDE and performs visibility queries on the GPUs to eliminate a subset of geometric primitives that are not in close proximity. We present an extension to CULLIDE to perform intra-object or self-collisions between complex models. Furthermore, we use a novel visibility-based classification scheme to compute potentially-colliding and collision-free subsets of objects and primitives that considerably improves the culling performance.

Fast and Reliable Collision Culling Using Graphics Processors

Naga K. Govindaraju, Ming C. Lin, and Dinesh Manocha

We present a reliable culling algorithm, R-CULLIDE, that enables fast and accurate collision detection between triangulated models in a complex environment. Our algorithm performs fast visibility queries on the GPUs to eliminate a subset of primitives that are not in close proximity. To overcome the accuracy problems caused by the limited viewport resolution, we compute the Minkowski sum of each primitive with a sphere and perform reliable 2.5D overlap tests between the primitives. We are able to achieve more effective collision culling as compared to prior object-space culling algorithms. Our algorithm can perform reliable GPU-based collision queries at interactive rates on all types of models, including non-manifold geometry, deformable models, and breaking objects.

Fast Collision Detection Between Massive Models Using Dynamic Simplification

Sung-Eui Yoon, Brian Salomon, Ming C. Lin, and Dinesh Manocha

We present an approach for collision detection between large models composed of tens of millions of polygons. Each model is represented as a clustered hierarchy of progressive meshes (CHPM). CHPM is a dual hierarchy of the original model; it serves both as a multi-resolution representation of the original model, as well as a bounding volume hierarchy. We use the cluster hierarchy of a CHPM to perform coarse-grained selective refinement and the progressive meshes for fine-grained local refinement. We present a novel conservative error metric to perform collision queries based on the multi-resolution representation. We use this error metric to perform dynamic simplification for collision detection. Our approach is conservative in that it may overestimate the set of colliding triangles, but never misses collisions. Furthermore, we are able to generate these hierarchies and perform collision queries using out-of-core techniques on all triangulated models.

Fast Continuous Collision Detection for Articulated Models

Stephane Redon, Young J. Kim, Ming C. Lin, and Dinesh Manocha

We present an algorithm to perform continuous collision detection for articulated models. Given two discrete configurations of the links of an articulated model, we use an arbitrary in-between motion to interpolate its motion between two successive time steps and check the resulting trajectory for collisions. Our approach uses a three-stage pipeline: dynamic bounding-volume hierarchy (D-BVH) culling based on interval arithmetic; culling refinement using the swept volume of line swept sphere (LSS) and graphics hardware accelerated queries; exact contact computation using OBB-trees and continuous collision detection between triangular primitives. The overall algorithm computes the time of collision, contact locations and prevents any interpenetration between the articulated model with the environment.

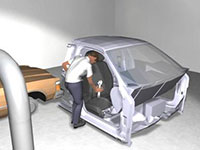

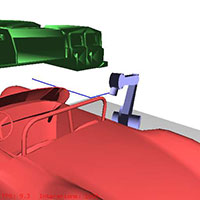

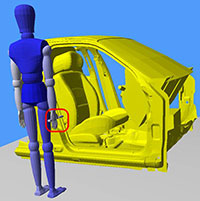

Interactive and Continuous Collision Detection for Avatars in Virtual Environments

Stephane Redon, Young J. Kim, Ming C. Lin, and Dinesh Manocha

![]()

We present an algorithm for continuous collision detection between a moving avatar and its surrounding virtual environment. We model the avatar as an articulated body using line-skeletons with constant o sets and the virtual environment as a collection of polygonized objects. Given the position and orientation of the avatar at discrete time steps, we use an arbitrary in-between motion to interpolate the path for each link between discrete instances. We bound the swept-space of each link using a swept volume (SV) and compute a bounding volume hierarchy to cull away links that are not in close proximity to the objects in the virtual environment. We generate the SVs of the remaining links and use them to check for possible interferences and estimate the time of collision between the surface of the SV and the objects in the virtual environment. Furthermore, we use graphics hardware to perform the collision queries on the dynamically generated swept surfaces. Our overall algorithm requires no pre-computation and is applicable to general articulated bodies.

Fast Update of OBB-trees for Collision Detection Between Articulated Bodies

Harald Schmidl and Ming C. Lin

Our work presents a new, faster oriented bounding box (OBB) algorithm which is especially helpful and beneficial for building box hierarchies for articulated, human-like figures. One of the largest critiques of using OBBs is that they can be very slow to update. Our main contribution lies in the fast update of the box hierarchy when the joints in the articulation hierarchy are updated. Our method provides a comparable fit to other methods of calculating loose fitting OBBs but with considerably faster speed.

Dual Hierarchies for Multi-resolution Collision Detection

Miguel A. Otaduy and Ming C. Lin

We present contact levels of detail (CLOD), a framework for multi-resolution collision detection. Given a polyhedral model, our algorithm automatically builds a dual hierarchy, both a multi-resolution representation of the original model and its bounding volume hierarchy for accelerating collision queries. We have proposed various error metrics, including object-space errors, velocity dependent gap, screen-space errors and their combinations. At runtime, our algorithm uses these error metrics to select the appropriate levels of detail independently at each potential contact location. Compared to the existing exact collision detection algorithms, we observe significant performance improvement using CLODs on some benchmarks, with little degradation in the visual rendering of simulations.

Fast Penetration Depth Computation Using Rasterization Hardware and Hierarchical Refinement

Young J. Kim, Miguel A. Otaduy, Ming C. Lin, and Dinesh Manocha

We present an algorithm to compute penetration depth (PD) between two polyhedral models. Given two overlapping polyhedra, it computes the minimal translation distance to separate them using a combination of object-space and image-space techniques. The algorithm computes pairwise Minkowski sums of decomposed convex pieces, performs closest-point query using rasterization hardware and refines the estimated PD by object-space walking. It uses bounding volume hierarchies, model simplification, object-space, and image-space culling algorithms to further accelerate the computation and refines the estimated PD in a hierarchical manner.

Fast Penetration Depth Estimation for Elastic Bodies Using Deformed Distance Fields

Susan M. Fisher and Ming C. Lin

We present a penetration depth estimation algorithm between deformable polyhedral objects. We assume the continuum of non-rigid models are discretized using standard techniques, such as finite element or finite difference methods. As the objects deform, the distance fields are deformed accordingly to estimate penetration depth, allowing enforcement of non-penetration constraints be- tween two colliding elastic bodies. This approach can automatically handle self-penetration and inter-penetration in a uniform manner.

————————– CROWD SIMULATION ————————

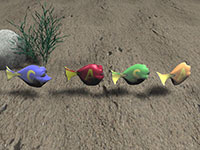

Biologically-Inspired Visual Simulation of Insect Swarms

Weizi (Philip) Li, David Wolinski, Julien Pettré, and Ming C. Lin

Representing the majority of living animals, insects are the most ubiquitous biological organisms on Earth. Being able to simulate insect swarms could enhance visual realism of various graphical applications. However, the very complex nature of insect behaviors makes its simulation a challenging computational problem. To address this, we present a general biologically-inspired framework for visual simulation of insect swarms. Our approach is inspired by the observation that insects exhibit emergent behaviors at various scales in nature. At the low level, our framework automatically selects and configures the most suitable steering algorithm for the local collision avoidance task. At the intermediate level, it processes insect trajectories into piecewise-linear segments and constructs probability distribution functions for sampling waypoints. These waypoints are then evaluated by the Metropolis-Hastings algorithm to preserve global structures of insect swarms at the high level. With this biologically inspired, data-driven approach, we are able to simulate insect behaviors at different scales and we evaluate our simulation using both qualitative and quantitative metrics. Furthermore, as insect data could be difficult to acquire, our framework can be adopted as a computer-assisted animation tool to interpret sketch-like input as user control and generate simulations of complex insect swarming phenomena.

Interactive Simulation of Dynamic Crowd Behaviors Using General Adaptation Syndrome Theory

Sujeong Kim, Stephen J. Guy, Dinesh Manocha, and Ming C. Lin

We propose a new technique to simulate dynamic patterns of crowd behaviors using the General Adaptation Syndrome model. Our model accounts for permanent, stable disposition and the dynamic nature of human behaviors that change in response to the situation. The resulting approach accounts for changes in behavior as a response to external stressors, based on well-known theories in psychology. We combine this model with recent techniques on personality modeling for multi-agent simulations to capture a wide variety of behavioral changes and stressors. The overall formulation allows different stressors, expressed as functions of space and time, including time pressure, positional stressors, area stressors and inter-personal stressors. This model can be used to simulate dynamic crowd behaviors at interactive rates, including walking with variable speeds, breaking lane-formation over time and cutting through a normal flow. We also perform qualitative and quantitative comparisons between our simulation results and real-world observations.

Simulating Heterogeneous Crowd Behaviors Using Personality Trait Theory

Stephen J. Guy, Sujeong Kim, Ming C. Lin, and Dinesh Manocha

We present a new technique to generate heterogeneous crowd behaviors using personality trait theory. Our formulation is based on adopting results of a user study to derive a mapping from crowd simulation parameters to the perceived behaviors of agents in computer-generated crowd simulations. We also derive a linear mapping between simulation parameters and personality descriptors corresponding to the well-established Eysenck three-factor personality model. Furthermore, we propose a novel two-dimensional factorization of perceived personality in crowds based on a statistical analysis of the user study results. Finally, we demonstrate that our mappings and factorizations can be used to generate heterogeneous crowd behaviors in different settings.

A Least-effort Approach to Crowd Simulation

Stephen J. Guy, Sean Curtis, Ming C. Lin, and Dinesh Manocha

We present an algorithm for simulating large-scale crowds at interactive rates based on the principle of least effort (PLE). Our approach uses an optimization method to compute a biomechanically energy-efficient, collision-free trajectory that minimizes the amount of effort for each heterogeneous agent in a large crowd. Moreover, the algorithm can automatically generate many emergent phenomena such as lane formation, crowd compression, edge and wake effects ant others. We compare the results from our simulations to data collected from prior studies in pedestrian and crowd dynamics, and provide visual comparisons with real-world video. In practice, our approach can interactively simulate large crowds with thousands of agents on a desktop PC and naturally generates a diverse set of emergent behaviors.

Synthesizing Human Motion in Constrained Environments

Jia Pan, Liangjun Zhang, Ming C. Lin, and Dinesh Manocha

We present an algorithm to synthesize plausible motions for high-degree-of-freedom human-like articulated figures in constrained environments with multiple obstacles. Our approach is general and makes no assumptions about the articulated model or the environment. Furthermore, we use path perturbation and replanning techniques to satisfy the kinematic and dynamic constraints on the motion. In order to generate realistic human-like motion, we introduce a motion blending algorithm that refines the path computed by the planner with motion capture data to compute a smooth and plausible trajectory.

Modeling Collision Avoidance Behavior for Virtual Humans

Stephen J. Guy, Ming C. Lin, and Dinesh Manocha

We present RCAP, a new trajectory planning algorithm for virtual humans. Our approach focuses on implicit cooperation between multiple virtual agents in order to share the work of avoiding collisions with each other. Specifically, we extend recent work on multi-robot planning to better model how humans avoid collisions by introducing new parameters that model human traits, such as reaction time and biomechanical limitations. We validate this new model based on data of real humans walking captured by the Locanthrope project. We also show how our model extends to complex scenarios with multiple agents interacting with each other and avoiding nearby obstacles.

Aggregate Dynamics for Dense Crowd Simulation

Rahul Narain, Abhinav Golas, Sean Curtis, and Ming C. Lin

We present a novel, scalable approach for simulating such crowds, using a dual representation both as discrete agents and as a single continuous system. In the continuous setting, we introduce a novel variational constraint called unilateral incompressibility, to model the large-scale behavior of the crowd, and accelerate inter-agent collision avoidance in dense scenarios.

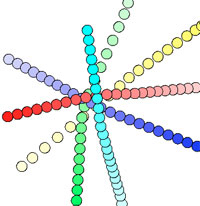

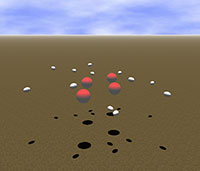

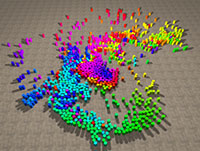

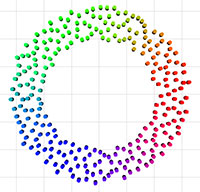

Optimal Reciprocal Collision Avoidance

Jur van den Berg, Stephen J. Guy, Jamie Snape, Ming C. Lin, and Dinesh Manocha

We present a formal approach to reciprocal collision avoidance, where multiple independent mobile robots or agents need to avoid collisions with each other without communication among agents while moving in a common workspace. Our formulation, optimal reciprocal collision avoidance (ORCA), provides sufficient conditions for collision-free motion by letting each agent take half of the responsibility of avoiding pairwise collisions. Selecting the optimal action for each agent is reduced to solving a low-dimensional linear program, and we prove that the resulting motions are smooth. We test our ORCA approach on a team of iRobot Create differential-drive mobile robots, as well as on several dense and complex simulation scenarios in both 2D and 3D workspaces involving thousands of agents, and compute collision-free actions for all of them in only a few milliseconds.

Highly Parallel Collision Avoidance for Multi-agent Simulation

Stephen J. Guy, Ming C. Lin, and Dinesh Manocha

We present the ClearPath collision avoidance algorithm between multiple agents for real-time simulations. ClearPath extends the velocity obstacle (VO) from robotics and formulates the conditions for collision-free navigation as a quadratic optimization problem. We use a discrete optimization method to efficiently compute the motion of each agent and parallelize the algorithm by exploiting data and thread-level parallelism. ClearPath can robustly handle dense scenarios with hundreds of thousands of heterogeneous agents in a few milliseconds.

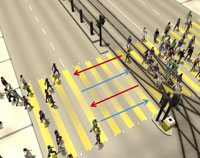

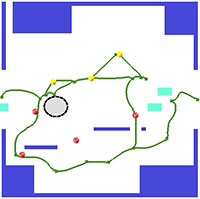

Directing Crowd Simulations Using Navigation Fields

Sachin Patil, Jur van den Berg, Sean Curtis, Ming C. Lin, and Dinesh Manocha

We present an approach based on navigation fields to direct and control virtual crowds. Our method directs agents towards their desired goals based on guidance trajectories. The system allows the user to sketch the paths directly in the scene or import motion fields extracted from video footage. The input guidance fields are blended together to form a goal-directed navigation field to direct virtual crowds.

Composite Agents

Hengchin (Yero) Yeh, Sean Curtis, Sachin Patil, Jur van den Berg, Dinesh Manocha, and Ming C. Lin

We introduce composite agents to effectively model complex agent interactions for agent-based crowd simulation. Each composite agent consists of a basic agent that is associated with one or more proxy agents. The composite agent formulation allows an agent to exercise influence over other agents greater than that implied by its physical properties. Composite agents can be added to most agent-based simulation systems and used to model emergent behaviors among individuals.

Interactive Navigation of Individual Agents in Crowded Environments

Jur van den Berg, Sachin Patil, Jason Sewall, Dinesh Manocha, and Ming C. Lin

We present an approach for interactive navigation and planning of multiple agents in crowded scenes with moving obstacles. Our formulation uses a pre-computed roadmap that provides macroscopic, global connectivity for way-finding and combines it with fast and localized navigation for each agent. At runtime, each agent senses the environment independently and computes a collision-free path based on an extended velocity obstacle (VO) and smoothness constraints. Furthermore, our algorithm ensures that each agent exhibits no oscillatory behaviors or gets trapped at a local minimum in crowded environments.

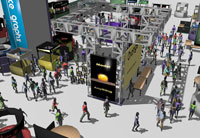

Navigating Virtual Agents in Online Virtual Worlds

Russell Gayle and Dinesh Manocha

We present an approach for navigating autonomous virtual agents in online virtual worlds that are based on a centralized server network topology. The motion of each virtual agent is controlled through local and global navigation. Our local navigation model is based on artificial social forces that has been extended to account for inaccurate sensing from network latency. Global navigation for each virtual agent is based on cell decomposition and computes high level paths. The overall computation is balanced by performing local navigation on client machines and global navigation on the server.

Real-time Navigation of Independent Agents Using Adaptive Roadmaps

Avneesh Sud, Russell Gayle, Erik Andersen, Stephen J. Guy, Ming C. Lin, and Dinesh Manocha

We present an algorithm for navigating a large number of independent agents in complex and dynamic environments. We compute adaptive roadmaps to perform global path planning for each agent simultaneously. We take into account dynamic obstacles and inter-agent interaction forces to continuously update the roadmap by using a physically-based agent dynamics simulator. We also introduce link bands for resolving collisions among multiple agents.

Real-time Path Planning for Virtual Agents in Dynamic Environments

Avneesh Sud, Erik Andersen, Sean Curtis, Ming C. Lin, and Dinesh Manocha

We present an approach for real-time path planning of multiple virtual agents in complex dynamic scenes. We introduce the multi-agent navigation graph (MaNG), which is constructed from the first- and second-order Voronoi diagrams. The MaNG is used to perform route planning and proximity computations for each agent in real time. We compute the MaNG using graphics hardware and present culling techniques to accelerate the computation.

Fundamental Diagram Adherent Interactive Crowd Simulation using Density-Dependent Filters

Sahil Narang, Andrew Best, Sean Curtis, Benjamin Crétien, and Dinesh Manocha

We present a novel algorithm to model density-dependent behaviors in crowd simulation. Our approach aims to generate pedestrian trajectories that correspond to the speed/density relationships that are typically expressed using the Fundamental Diagram. The algorithm’s formulation can be easily combined with well-known multi-agent simulation techniques that use social forces or reciprocal velocity obstacles for local navigation. Our approach results in better utilization of free space by the pedestrians and has a small computational overhead. We are able to generate human-like dense crowd behaviors in large indoor and outdoor environments; we validate our results by comparing them with captured crowd trajectories.

————————— RAY TRACING ————————

Dynamic Scene Benchmarks

The dynamic scenes used in our projects are available.

SATO: Surface-Area Traversal Order for Shadow Ray Tracing

Jae-Ho Nah and Dinesh Manocha

We present the surface-area traversal order (SATO) metric to accelerate shadow ray traversal. Our formulation uses the surface area of each child node to compute the traversal order. In this metric, we give a traversal priority to the child node with the larger surface area to quickly find occluders. Our algorithm reduces the preprocessing overhead significantly, and is much faster than other metrics. Overall, the SATO is useful for ray tracing large and complex dynamic scenes (e.g. a few million triangles) with shadows.

RayCore: RayCore: A ray-tracing hardware architecture for mobile devices

We present RayCore, a mobile ray-tracing hardware architecture. RayCore facilitates high-quality rendering effects, such as reflection, refraction, and shadows, on mobile devices by performing real-time Whitted ray tracing. RayCore consists of two major components: ray-tracing units (RTUs) based on a unified traversal and intersection pipeline and a tree-building unit (TBU) for dynamic scenes. The overall RayCore architecture offers consid- erable benefits in terms of die area, memory access, and power consump- tion. We have evaluated our architecture based on FPGA and ASIC evalua- tions and demonstrate its performance on different benchmarks. According to the results, our architecture demonstrates high performance per unit area and unit energy, making it highly suitable for use in mobile devices.

HART: A Hybrid Architecture for Ray Tracing Animated Scenes

We present a hybrid architecture, inspired by asynchronous BVH construction, for ray tracing animated scenes. Our hybrid architecture utilizes heterogeneous hardware resources: dedicated ray-tracing hardware for BVH updates and ray traversal and a CPU for BVH reconstruction. We also present a traversal scheme using a primitive’s axis-aligned bounding box (PrimAABB). This scheme reduces ray-primitive intersection tests by reusing existing BVH traversal units and the primAABB data for tree updates; it enables the use of shallow trees to reduce tree build times, tree sizes, and bus bandwidth requirements. Furthermore, we present a cache scheme that exploits consecutive memory access by reusing data in an L1 cache block. We perform cycle-accurate simulations to verify our architecture, and the simulation results indicate that the proposed architecture can achieve real-time Whitted ray tracing animated scenes at 1920×1200 resolution. This result comes from our high-performance hardware architecture and minimized resource requirements for tree updates.

Selective Ray Tracing for Interactive High-quality Shadows

Christian Lauterbach, Qi Mo, and Dinesh Manocha

We present novel algorithms to achieve interactive high-quality illumination with hard and soft shadows in complex models. Our approach combines the efficiency of rasterization-based approaches with the accuracy of a ray tracer. We use conservative image-space bounds to identify only a small subset of the pixels in the rasterized image and perform selective ray tracing on those pixels. The algorithm can handle moving light sources as well as dynamic scenes. In practice, our approach is able to generate high quality hard and soft shadows on complex models with millions of triangles at almost interactive rates on current high-end GPUs.

Fast BVH Construction on GPUs

Christian Lauterbach and Dinesh Manocha

We present two novel parallel algorithms for rapidly constructing bounding volume hierarchies on many-core GPUs. The first uses a linear ordering derived from spatial Morton codes to build hierarchies extremely quickly and with high parallel scalability. The second is a top-down approach that uses the surface area heuristic (SAH) to build hierarchies optimized for fast ray tracing. Both algorithms are combined into a hybrid algorithm that removes existing bottlenecks in the algorithm for GPU construction performance and scalability leading to significantly decreased build time. The resulting hierarchies are close in to optimized SAH hierarchies, but the construction process is substantially faster, leading to a significant net benefit when both construction and traversal cost are accounted for. Our preliminary results show that current GPU architectures can compete with CPU implementations of hierarchy construction running on multicore systems. In practice, we can construct hierarchies of models with up to several million triangles and use them for fast ray tracing or other applications.

ReduceM: Interactive and Memory Efficient Ray Tracing of Large Models

Christian Lauterbach, Sung-Eui Yoon, Ming Tang, and Dinesh Manocha

We present a novel representation and algorithm, ReduceM, for memory efficient ray tracing of large scenes. ReduceM exploits the connectivity between triangles and decomposes the model into triangle strips. We also describe a novel stripification algorithm, Strip-RT, that can generate long strips with high spatial coherence optimized for ray tracing. We use a two-level traversal algorithm for ray-primitive intersection. In practice, ReduceM can significantly reduce the storage overhead and ray trace massive models with a hundreds of millions of triangles at interactive rates on desktop PCs with 4-8GB of main memory.

AD-Frustum: Adaptive Frustum Tracing for Interactive Sound Propagation

Anish Chandak, Christian Lauterbach, Micah Taylor, Zhimin Ren, and Dinesh Manocha

We present an interactive algorithm to compute sound propagation paths for transmission, specular refection and edge diffraction in complex scenes. Our formulation uses an adaptive frustum representation that is automatically sub-divided to accurately compute intersections with the scene primitives. We describe a simple and fast algorithm to approximate the visible surface for each frustum and generate new frusta based on specular refection and edge diffraction. Our approach is applicable to all triangulated models and we demonstrate its performance on architectural and outdoor models with tens or hundreds of thousands of triangles and moving objects. In practice, our algorithm can perform geometric sound propagation in complex scenes at 4-20 frames per second on a multi-core PC.

Paper (IEEE Visualization, 2008) ![]()

Ray-strips: A Compact Mesh Representation for Interactive Ray Tracing

Christian Lauterbach, Sung-Eui Yoon, and Dinesh Manocha

We present a novel hierarchical representation, Ray-Strips, for interactive ray tracing of complex triangle meshes. Prior optimized algorithms for ray tracing explicitly store each triangle in the input model. Instead, a Ray-Strip takes advantage of mesh connectivity for compact storage, efficient traversal and ray intersections. As a result, we considerably reduce the memory overhead of the original model and the hierarchical representation. We also present efficient algorithms for single ray and ray packet traversal using Ray-Strips. Furthermore, we demonstrate an additional benefit of our representation, maintaining utilization of the SIMD capabilities of current CPUs for incoherent ray packets and single rays. We show the benefit of Ray-Strips on models with tens of thousands to tens of millions of triangles. In practice, our approach can reduce the storage overhead of interactive ray tracing algorithms by up to five times compared to standard interactive approaches while even improving runtime performance for large models.

Paper (Interactive Ray Tracing Symposium, 2007)

Ray Tracing Dynamic Scenes Using Selective Restructuring

Sung-Eui Yoon, Sean Curtis, and Dinesh Manocha

We present a novel algorithm to selectively restructure bounding volume hierarchies (BVHs) for ray tracing dynamic scenes. We derive two new metrics to evaluate the culling efficiency and restructuring benefit of any BVH. Based on these metrics, we perform selective restructuring operations that efficiently reconstruct small portions of a BVH instead of the entire BVH. Our approach is general and applicable to complex and dynamic scenes, including topological changes. We apply our selective restructuring algorithm to improve the performance of ray tracing dynamic scenes that consist of hundreds of thousands of triangles. In our benchmarks, we observe up to an order of magnitude improvement over prior BVH-based ray tracing algorithms.

Interactive Sound Propagation in Dynamic Scenes Using Frustum Tracing

Christian Lauterbach, Anish Chandak, Micah Taylor, Zhimin Ren, and Dinesh Manocha

We present a new approach for simulating real-time sound propagation in complex, virtual scenes with dynamic sources and objects. Our approach combines the efficiency of interactive ray tracing with the accuracy of tracing a volumetric representation. We use a four-sided convex frustum and perform clipping and intersection tests using ray packet tracing. A simple and efficient formulation is used to compute secondary frusta and perform hierarchical traversal. We demonstrate the performance of our algorithm in an interactive system for game-like environments and architectural models with tens or hundreds of thousands of triangles. Our algorithm can simulate and render sounds at interactive rates on a high-end PC.

R-LODs for Ray Tracing Massive Models

Sung-Eui Yoon, Christian Lauterbach, and Dinesh Manocha

We present a novel level-of-detail (LOD) algorithm to accelerate ray tracing of massive models. Our approach computes drastic simplifications of the model and integrates the LODs directly with the kd-tree data structure. We introduce a simple and efficient LOD metric to bound the error for primary and secondary rays. The LOD representation has small runtime overhead and our algorithm can be combined with ray coherence techniques and cache-coherent layouts to improve the performance. In practice, the use of LODs can alleviate aliasing artifacts and improve memory coherence. We implement our algorithm on both 32-bit and 64-bit machines and achieve up to 2-20 times improvement in frame rate while rendering models consisting of tens or hundreds of millions of triangles with little loss in image quality.

Interactive Ray Tracing of Deformable Models Using BVHs

Christian Lauterbach, Sung-Eui Yoon, David Tuft, and Dinesh Manocha

We present an efficient approach for interactive ray tracing of deformable or animated models. Unlike many of the recent approaches for ray tracing static scenes, we use bounding volume hierarchies (BVHs) instead of kd-trees as the underlying acceleration structure. Our algorithm makes no assumptions about the simulation or the motion of objects in the scene and dynamically updates or recomputes the BVHs. We also describe a method to detect BVH quality degradation during the simulation in order to determine when the hierarchy needs to be rebuilt. Furthermore, we show that the ray coherence techniques introduced for kd-trees can be naturally extended to BVHs and yield similar improvements. Our algorithm has been applied to different scenarios composed of tens of thousands to a million triangles arising in animation and simulation. In practice, our algorithm can ray trace these models at 4-20 frames a second on a dual-core Xeon PC.

Cache-efficient Layouts of Bounding Volume Hierarchies

Sung-Eui Yoon and Dinesh Manocha

We present a novel algorithm to compute cache-efficient layouts of bounding volume hierarchies (BVHs) of polygonal models. We does not make any assumptions about the cache parameters or block sizes of the memory hierarchy. We introduce a new probabilistic model to predict the runtime access patterns of a BVH. Our layout computation algorithm utilizes parent-child and spatial localities between the accessed nodes to reduce both the number of cache misses and the size of working set. Also, our algorithm works well with spatial partitioning hierarchies including kd-trees. We use our algorithm to compute layouts of BVHs and spatial partitioning hierarchies of large models composed of millions of triangles. We compare our cache-efficient layouts with other layouts in the context of collision detection and ray tracing. In our benchmarks, our layouts consistently show better performance over other layouts and improve the performance of these applications by 26-2600% without any modification of the underlying algorithms or runtime applications.

————————— MOTION PLANNING ————————

Single Robot or Agent

Efficient Local Planning Using Connection Collision Query

Min Tang, Young J. Kim, and Dinesh Manocha

We introduce a novel proximity query, connection collision query (CCQ), and use it for efficient and exact local planning in sampling-based motion planners. Given two collision-free configurations, CCQ checks whether these configurations can be connected by a given continuous path that either lies completely in the free space or penetrates any obstacle by at most a given threshold. Our approach is general, robust, and can handle different continuous path formulations. We have integrated the CCQ algorithm with sampling-based motion planners and can perform reliable local planning queries with little performance degradation, as compared to prior methods. Moreover, the CCQ-based exact local planner is about an order of magnitude faster than prior exact local planning algorithms.

Real-time Motion Planning and Global Navigation Using GPUs

Jia Pan, Christian Lauterbach, and Dinesh Manocha

We present randomized algorithms for solving global motion planning problems that exploit the computational capabilities of many-core graphics processors (GPUs). Our approach uses thread and data parallelism to achieve high performance for all components of sample-based algorithms, including random sampling, nearest neighbor computation, local planning, collision queries, and graph search. The overall approach can efficiently solve both the multi-query and single-query versions of the problem and obtain considerable speedups over prior CPU-based algorithms. This is the first algorithm that can perform real-time motion planning and global navigation using commodity hardware.

Constraint-based Motion Synthesis for Deformable Models

William Moss, Ming C. Lin, and Dinesh Manocha

We present a goal-directed motion synthesis technique that integrates sample-based planning methods with constraint-based dynamics simulation using a finite element formulation to generate collision-free paths for a deformable model. Our method allows the user to quickly specify various constraints, including a desired trajectory as a sparse sequence of waypoints, and automatically computes a physically plausible path that satisfies geometric and physical constraints.

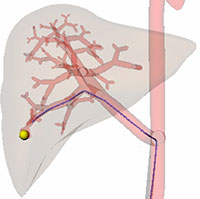

Path Computation for Part Disassembly

Liangjun Zhang, Young J. Kim, and Dinesh Manocha

We present an approach to computing a collision-free path for part disassembly simulation and virtual prototyping of part removal. Our algorithm is based on sample-based motion planning that connects collision-free samples in the configuration space using local planning. We describe techniques to generate samples in narrow passages and efficient local planning algorithms to connect them with collision-free paths.

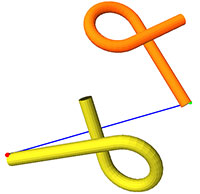

Retraction-based RRT Planner

Liangjun Zhang and Dinesh Manocha

We present a optimization-based retraction algorithm to improve the performance of sample-based planners in narrow passages for three-dimensional rigid robots. The retraction step is formulated as an optimization problem using a distance metric in the configuration space. We use local contact analysis to compute samples near the boundary of C-obstacle and use those samples to improve the performance of rapidly-exploring random tree (RRT) planners in narrow passages.

Complete Motion Planning

Liangjun Zhang, Gokul Varadhan, Young J. Kim, Shankar Krishnan, and Dinesh Manocha

We present efficient and practical algorithms for complete motion planning. A complete motion planner either computes a collision-free path from the initial configuration to the final configuration or concludes that no such path exists.

Physics-based Motion Planning

Russell Gayle, Ming C. Lin, and Dinesh Manocha

We propose a physics-based motion planning (PMP) approach to motion planning. PMP relies upon motion equations and robot control in order to find a path. The resulting path obeys kinematic and dynamics constraints while also finding a goal; something that configuration-space randomized planners cannot accomplish. Furthermore, motion equations need not be restricted to the robot. The paths or roadmaps themselves can also be controlled in a physically plausible manner to ensure a collision-free path in a dynamic environment.

Local Planning in the Contact Space

Stephane Redon and Ming C. Lin

We present a local planning method in contact-space which uses continuous collision detection (CCD). We use the precise contact information provided by a CCD algorithm to enhance a randomized planner by efficiently sampling the contact-space, as well as by constraining the sampling when the roadmap is expanded.

Constraint-based Motion Planning of Deformable Robots

Russell Gayle, Ming C. Lin, and Dinesh Manocha

We present a algorithm for motion planning of a deformable robot in a static environment. Our algorithm computes an approximate path using the probabilistic roadmap method (PRM). We use constraint-based planning to simulate robot deformation and make appropriate path adjustments and corrections to compute a collision-free path. Our PRM algorithm takes into account geometric constraints, like non-penetration, and physical constraints, like volume preservation.

Path Planning for Deformable Robots

Russell Gayle, Ming C. Lin, and Dinesh Manocha

We present an algorithm for path planning for a flexible robot in complex environments. Our algorithm computes a collision-free path by taking into account geometric and physical constraints, including obstacle avoidance, non-penetration, volume preservation, surface tension, and energy minimization.

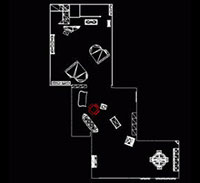

Interactive Navigation Using Path Planning

Brian Salomon, Maxim Garber, Ming C. Lin, and Dinesh Manocha

We have developed a system for navigation of massive environments using path planning. Our system provides the user with a local driving mode that keeps an avatar constrained to walkable surfaces in the model and a global path planner. Arbitrary configurations are specified by the user and a preprocessed graph is searched for a path between the configurations. The graph is generated using an algorithm based on the visibility probabilistic roadmap (PRM) technique and the runtime path following uses the local driving mode to move the user along the path.

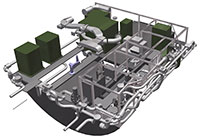

Constraint-based Motion Planning for Virtual Prototyping

Maxim Garber and Ming C. Lin

We present a framework for motion planning of rigid and articulated robots in complex, dynamic, three-dimensional environments and demonstrate its application to virtual prototyping. It is based on transforming the motion planning problem into the simulation of a dynamical system. Motion of each rigid robot is subject to virtual forces induced by all types of geometric constraints. The resulting algorithm uses all contributing forces to move the robot along the estimated path, while avoiding collision with obstacles and enforcing joint and positional constraints.

Voronoi-based Hybrid Motion Planner

Mark Foskey, Maxim Garber, Ming C. Lin, and Dinesh Manocha

We present a hybrid path planning algorithm for rigid and articulated bodies translating and rotating in three-dimensional workspace. We compute a Voronoi roadmap in the workspace from a discrete approximation to the generalized Voronoi diagram (GVD) and combine it with bridges computed by a randomized path planner with Voronoi-biased sampling.

Randomized Path Planning

Charles Pisula, Kenneth E. Hoff III, Ming C. Lin, and Dinesh Manocha

We present algorithms for sampling near the medial axis and building roadmap graphs for a free-flying rigid body. We initially compute a bounded-error discrete Voronoi diagram of the obstacles in the workspace and use it to generate samples in the free space and use multi-level connection strategies and local planning algorithms to generate roadmap graphs. We also utilize the distance information provided by our Voronoi algorithm for fast proximity queries and sampling the configurations.

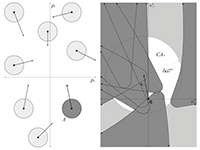

Motion Planning Using Generalized Voronoi Diagrams

Kenneth E. Hoff III, Timothy Culver, John Keyser, Ming C. Lin, and Dinesh Manocha

We present techniques for fast motion planning by using discrete approximations of the generalized Voronoi diagram (GVD) computed with graphics hardware. Approaches based on the GVD computation are applicable to both static and dynamic environments of fairly high complexity. The computation of the GVD provides fast proximity query toolkits for motion planning.

Multiple Robots or Agents

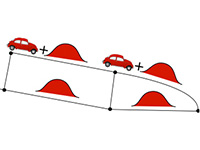

Reciprocal Collision Avoidance with Acceleration-velocity Obstacles

Jur van den Berg, Jamie Snape, Stephen J. Guy, and Dinesh Manocha

We present an approach for collision avoidance for mobile robots that takes into account acceleration constraints. We discuss both the case of navigating a single robot among moving obstacles, and the case of multiple robots reciprocally avoiding collisions with each other while navigating a common workspace. Inspired by the concept of velocity obstacles, we introduce the acceleration-velocity obstacle (AVO) to let a robot avoid collisions with moving obstacles while obeying acceleration constraints. AVO characterizes the set of new velocities the robot can safely reach and adopt using proportional control of the acceleration. We extend this concept to reciprocal collision avoidance for multi-robot settings, by letting each robot take half of the responsibility of avoiding pairwise collisions. Our formulation guarantees collision-free navigation even as the robots act independently and simultaneously, without coordination. Our approach is designed for holonomic robots, but can also be applied to kinematically constrained non-holonomic robots such as cars. We have implemented our approach, and we show simulation results in challenging environments with large numbers of robots and obstacles.

Smooth Navigation for Multiple Differential-drive Robots

Jamie Snape, Jur van den Berg, Stephen J. Guy, and Dinesh Manocha

We present a method for smooth and collision-free navigation for multiple independent robots under differential-drive constraints. Our algorithm is based on the optimal reciprocal collision avoidance (ORCA) formulation and guarantees both smoothness in the trajectories of the robots and locally collision-free paths. We provide proofs of these guarantees and demonstrate the effectiveness of our method in experimental scenarios using iRobot Create mobile robots navigating among each other.

Navigating Multiple Simple-airplanes in 3D Workspace

Jamie Snape and Dinesh Manocha

We consider the problem of collision-free navigation of multiple flying robots in 3D workspace. Our approach extends a simple car to a simple-airplane, with constraints on speed, steering angle, and change in altitude. Our algorithm uses optimal reciprocal collision avoidance (ORCA) to independently compute a local trajectory without collisions or oscillations for each simple-airplane. We consider kinematic and dynamic constraints and use variable reciprocity when choosing velocities to ensure less-constrained simple-airplanes take more responsibility for avoiding collisions.

Independent Navigation of Multiple Mobile Robots

Jamie Snape, Jur van den Berg, Stephen J. Guy, and Dinesh Manocha

We present the hybrid reciprocal velocity obstacle (HRVO) for smooth and collision-free navigation of multiple independent mobile robots amongst each other. We build on prior work related to the velocity obstacle (VO) and reciprocal velocity obstacle (RVO) concepts and introduce HRVO for collision avoidance that takes into account the kinematics of each robot and uncertainty in sensor data.

Generalized Velocity Obstacles

David Wilkie, Jur van den Berg, and Dinesh Manocha

We address the problem of real-time navigation in dynamic environments for car-like robots. The generalized velocity obstacle (GVO) adapts the velocity obstacle (VO) concept to take into account the constraints of a car-like robot. We use the GVO formulation to find controls that will allow collision-free navigation in a dynamic environment.

Optimal Reciprocal Collision Avoidance

Jur van den Berg, Stephen J. Guy, Jamie Snape, Ming C. Lin, and Dinesh Manocha

We present a formal approach to reciprocal collision avoidance, where multiple independent mobile robots or agents need to avoid collisions with each other without communication among agents while moving in a common workspace. Our formulation, optimal reciprocal collision avoidance (ORCA), provides sufficient conditions for collision-free motion by letting each agent take half of the responsibility of avoiding pairwise collisions. Selecting the optimal action for each agent is reduced to solving a low-dimensional linear program, and we prove that the resulting motions are smooth. We test our ORCA approach on a team of iRobot Create differential-drive mobile robots, as well as on several dense and complex simulation scenarios in both 2D and 3D workspaces involving thousands of agents, and compute collision-free actions for all of them in only a few milliseconds.

Coordination Using Generalized Social Potential Fields

Russell Gayle, William Moss, Ming C. Lin, and Dinesh Manocha

We present a approach to compute collision-free paths for multiple robots subject to local coordination constraints. Our approach generalizes the social potential field method to be applicable to both convex and non-convex polyhedra. Social potential fields are then integrated into a physics-based motion planning framework which uses constrained dynamics to solve the motion planning problem.

Reciprocal Velocity Obstacles

Jur van den Berg, Ming C. Lin, and Dinesh Manocha

We propose the reciprocal velocity obstacle (RVO) concept for real-time multi-agent navigation in which each agent navigates independently without explicit communication with other agents. RVO is an extension of the velocity obstacle (VO) that takes into account the reactive behavior of the other agents by implicitly assuming that they make similar collision-avoidance reasoning. RVO guarantees safe and oscillation-free motions for each of the agents.

Reactive Deforming Roadmaps

Russell Gayle, Avneesh Sud, Ming C. Lin, and Dinesh Manocha

We present a algorithm for motion planning of multiple robots amongst dynamic obstacles. The reactive deforming roadmap (RDR) uses deformable links and dynamically retracts to capture the connectivity of the free space. We use Newtonian physics and Hooke’s law to update the position of the milestones and deform the links in response to the motion of other robots and the obstacles.

Human-like Motion Planning

Synthesizing Human Motion in Constrained Environments

Jia Pan, Liangjun Zhang, Ming C. Lin, and Dinesh Manocha

We present an algorithm to synthesize plausible motions for high-degree-of-freedom human-like articulated figures in constrained environments with multiple obstacles. Our approach is general and makes no assumptions about the articulated model or the environment.

Motion Planning of Human-like Robots

Liangjun Zhang, Jia Pan, and Dinesh Manocha

We present a whole-body motion planning algorithm for human-like robots. The planning problem is decomposed into a sequence of low-dimensional subproblems. Our formulation is based on the fact that a human-like model is a tightly-coupled system and uses a constrained coordination scheme to solve the subproblems in an incremental manner.

—————————– TRAFFIC —————————-

Road Network Library

David Wilkie, Jason Sewall, Weizi Li, and Ming C. Lin

With growing interest in adapting simulation technology for the analysis, planning, and design of new transportation infrastructures and traffic management systems, efficient and accurate creation of 3D models of complex road networks using commonly available GIS data consisting of polylines is needed for realistic, large-scale urban traffic simulation. Road Network is a library consisted of efficient, automatic methods for extrapolating a road map from a GIS database to automatically create a geometrically correct and topologically consistent 3D model of large-scale road network to be readily used in a real-time traffic simulation, interactive visualization of virtual world, and autonomous vehicle navigation. The resulting model representation also provides important road features for traffic simulations, including smoothly connected ramps, highways, overpasses, legal merge zones, and intersections with arbitrary states and is independent of the simulation methodologies.

Project Website

Participatory Route Planning

David Wilkie, Cenk Baykal, and Ming C. Lin

We present an approach to “participatory route planning”, a novel concept that takes advantage of mobile devices, such as cellular phones or embedded systems in cars, to form an interactive, participatory network of vehicles that plan their travel routes based on the current traffic conditions and existing routes planned by the network of participants, thereby making more informed travel de-cision for each participating user. The premise of this approach is that a route, or plan, for a vehicle is also a prediction of where the car will travel. If routes are created for a sizable percentage of the total vehicle population, an estimate for the overall traffic pattern is attainable. Taking planned routes into account as predictions allows the entire traffic route planning system to better distribute vehicles and minimize traffic congestion. We present an approach that is suitable for realistic, city-scale scenarios, a prototype system to demonstrate feasibility, and experiments using a state-of-the-art microscopic traffic simulator.

Project Website

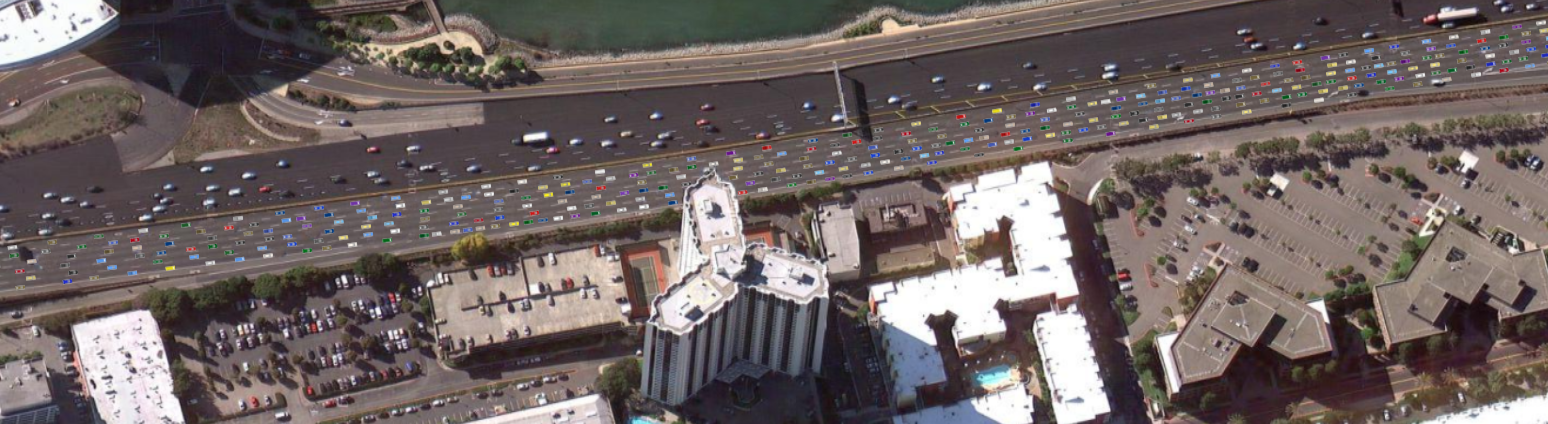

Flow Reconstruction for Data-Driven Traffic Animation

David Wilkie, Jason Sewall, and Ming C. Lin

‘Virtualized traffic’ reconstructs and displays continuous traffic flows from discrete spatio-temporal traffic sensor data or procedurally generated control input to enhance a sense of immersion in a dynamic virtual environment. In this paper, we introduce a fast technique to reconstruct traffic flows from in-road sensor measurements or procedurally generated data for interactive 3D visual applications. Our algorithm estimates the full state of the traffic flow from sparse sensor measurements (or procedural input) using a statistical inference method and a continuum traffic model. This estimated state then drives an agent-based traffic simulator to produce a 3D animation of vehicle traffic that statistically matches the original traffic conditions. Unlike existing traffic simulation and animation techniques, our method produces a full 3D rendering of individual vehicles as part of continuous traffic flows given discrete spatio-temporal sensor measurements. Instead of using a color map to indicate traffic conditions, users could visualize and fly over the reconstructed traffic in real time over a large digital cityscape.

Project Website

Transforming GIS Data into Functional Road Models for Large-Scale Traffic Simulation

David Wilkie, Jason Sewall, and Ming C. Lin

With growing interest in adapting simulation technology for the analysis, planning, and design of new transportation infrastructures and traffic management systems, efficient and accurate creation of 3D models of complex road networks using commonly available GIS data consisting of polylines is needed for realistic, large-scale urban traffic simulation. In this paper, we propose an efficient, automatic method for extrapolating a road map from a GIS database to automatically create a geometrically correct and topologically consistent 3D model of large-scale road network to be readily used in a real-time traffic simulation, interactive visualization of virtual world, and autonomous vehicle navigation. The resulting model representation also provides important road features for traffic simulations, including smoothly connected ramps, highways, overpasses, legal merge zones, and intersections with arbitrary states and is independent of the simulation methodologies. We test the 3D models of road networks generated by our algorithm on real-time traffic simulation using both macroscopic and microscopic techniques.

Project Website

Self-aware Traffic Route Planning

David Wilkie, Jur van den Berg, Ming C. Lin, and Dinesh Manocha

One of the most ubiquitous artificial intelligence (AI) applications is vehicle route planning. While state-of-the-art systems take into account current traffic conditions or historic traffic data, current planning approaches ignore the impact of their own plans on the future traffic conditions. We present a novel algorithm for self-aware route planning that uses the routes it plans for current vehicle traffic to more accurately predict future traffic conditions for subsequent cars. Our planner uses a roadmap with stochastic, time-varying traffic densities that are defined by a combination of historical data and the densities predicted by the planned routes for the cars ahead of the current traffic. We have applied our algorithm to large-scale traffic route planning, and demonstrated that our self-aware route planner can more accurately predict future traffic conditions, which results in a reduction of the travel time for those vehicles that use our algorithm.

Interactive Hybrid Simulation of Large-scale Traffic

Jason Sewall, David Wilkie, and Ming C. Lin

We present a novel, real-time algorithm for modeling large-scale, realistic traffic using a hybrid model of both continuum and agent-based methods for traffic simulation. We simulate individual vehicles in regions of interest using state-of-the-art agent-based models of driver behavior and use a faster continuum model of traffic flow in the remainder of the road network. Our key contributions are efficient techniques for the dynamic coupling of discrete vehicle simulation with aggregate behavior of continuum traffic. We demonstrate the flexibility and scalability of our interactive visual simulation techniques on extensive road networks using both real-world traffic data and synthetic scenarios.

Continuum Traffic Simulation

Jason Sewall, David Wilkie, Paul Merrell, and Ming C. Lin

We present a novel method for the synthesis and animation of realistic traffic flows on large-scale road networks. Our technique is based on a continuum model of traffic flow we extend to correctly handle lane changes and merges, as well as traffic behaviors due to changes in speed limit. We demonstrate how our method can be applied to the animation of many vehicles in a large-scale traffic network at interactive rates and show that our method can simulate believable traffic flows on publicly-available, real-world road data. We furthermore demonstrate the scalability of this technique on many-core systems.

Virtualized Traffic

Jur van den Berg, Jason Sewall, Ming C. Lin, and Dinesh Manocha

We present the concept of virtualized traffic to reconstruct and visualize continuous traffic flows from discrete spatial and temporal data provided by traffic sensors or generated artificially to enhance a sense of immersion in a dynamic virtual world. Our approach can reconstruct the traffic flows in between the two locations along the highway for immersive visualization of virtual cities or other environments. Virtualized traffic is applicable to high-density traffic on highways with an arbitrary number of lanes and takes into account the geometric, kinematic, and dynamic constraints on the cars.

—————————– SOUND —————————-

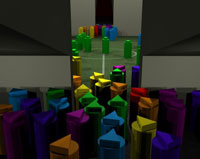

Symphony: Real-time Physically-based Sound Synthesis for Large Scale Environments

Nikunj Raghuvanshi and Ming C. Lin

We present an interactive approach for generating realistic physically-based sounds from rigid-body dynamic simulations. We use spring-mass systems to model each object’s local deformation and vibration, which we demonstrate to be an adequate approximation for capturing physical effects such as magnitude of impact forces, location of impact, and rolling sounds. No assumption is made about the mesh connectivity or topology. Surface meshes used for rigid-body dynamic simulation are utilized for sound simulation without any modifications. We use results in auditory perception and a novel priority-based quality scaling scheme to enable the system to meet variable, stringent time constraints in a real-time application, while ensuring minimal reduction in the perceived sound quality. With this approach, we have observed up to an order of magnitude speed-up compared to an implementation without the acceleration. As a result, we are able to simulate moderately complex simulations with up to hundreds of sounding objects at over 100 frames per second (FPS), making this technique well suited for interactive applications like games and virtual environments. Furthermore, we utilize OpenAL and EAX on Creative Sound Blaster Audigy 2 cards for fast hardware-accelerated propagation modeling of the synthesized sound.

Project Website ![]()

Synthesizing Contact Sounds Between Textured Models

Zhimin Ren, Hengchin (Yero) Yeh, and Ming C. Lin

We present a new interaction handling model for physics-based sound synthesis in virtual environments. A new three-level surface representation for describing object shapes, visible surface bumpiness, and microscopic roughness (e.g. friction) is proposed to model surface contacts at varying resolutions for automatically simulating rich, complex contact sounds. This new model can capture various types of surface interaction, including sliding, rolling, and impact with a combination of three levels of spatial resolutions. We demonstrate our method by synthesizing complex, varying sounds in several interactive scenarios in a game-like virtual environment. The three-level interaction model for sound synthesis enhances the perceived coherence between audio and visual cues in virtual reality applications.

Project Website ![]()

Physics-based Liquid Sound Synthesis

William Moss, Hengchin (Yero) Yeh, Ming C. Lin, and Dinesh Manocha

We present a novel approach for synthesizing liquid sounds directly from visual simulations of fluid dynamics. The sound generated by liquid is mainly due to the vibration of resonating bubbles in the medium. Our approach couples physically-based equations for bubble resonance with a real-time shallow-water fluid simulator as well as an hybrid SPH-grid-based simulator to perform automatic sound synthesis. Our system has been effectively demonstrated on several benchmarks.

Project Website ![]()

Tabletop Ensemble: Touch-Enabled Virtual Percussion Instruments

Zhimin Ren, Ravish Mehra, Jason Coposky, and Ming C. Lin

We present an interactive virtual percussion instrument system, Tabletop Ensemble, that can be used by a group of collaborative users simultaneously to emulate playing music in real world while providing them with flexibility of virtual simulations. An optical multi-touch tabletop serves as the input device. A novel touch handling algorithm for such devices is presented to translate users’ interactions into percussive control signals appropriate for music playing. These signals activate the proposed sound simulation system for generating realistic user-controlled musical sounds. A fast physically-based sound synthesis technique, modal synthesis, is adopted to enable users to directly produce rich, varying musical tones, as they would with the real percussion instruments. In addition, we propose a simple coupling scheme for modulating the synthesized sounds by an accurate numerical acoustic simulator to create believable acoustic effects due to cavity in music instruments. This paradigm allows creating new virtual percussion instruments of various materials, shapes, and sizes with little overhead. We believe such an interactive, multi-modal system would offer capabilities for expressive music playing, rapid prototyping of virtual instruments, and active exploration of sound effects determined by various physical parameters in a classroom, museum, or other educational settings. Virtual xylophones and drums with various physics properties are shown in the presented system.

Project Website

AD-Frustum: Adaptive Frustum Tracing for Interactive Sound Propagation

Anish Chandak, Christian Lauterbach, Micah Taylor, Zhimin Ren, and Dinesh Manocha

We present an interactive algorithm to compute sound propagation paths for transmission, specular refection and edge diffraction in complex scenes. Our formulation uses an adaptive frustum representation that is automatically sub-divided to accurately compute intersections with the scene primitives. We describe a simple and fast algorithm to approximate the visible surface for each frustum and generate new frusta based on specular refection and edge diffraction. Our approach is applicable to all triangulated models and we demonstrate its performance on architectural and outdoor models with tens or hundreds of thousands of triangles and moving objects. In practice, our algorithm can perform geometric sound propagation in complex scenes at 4-20 frames per second on a multi-core PC.

Paper (IEEE Visualization, 2008) ![]()

Interactive Sound Propagation in Dynamic Scenes Using Frustum Tracing

Christian Lauterbach, Anish Chandak, Micah Taylor, Zhimin Ren, and Dinesh Manocha

We present a new approach for simulating real-time sound propagation in complex, virtual scenes with dynamic sources and objects. Our approach combines the efficiency of interactive ray tracing with the accuracy of tracing a volumetric representation. We use a four-sided convex frustum and perform clipping and intersection tests using ray packet tracing. A simple and efficient formulation is used to compute secondary frusta and perform hierarchical traversal. We demonstrate the performance of our algorithm in an interactive system for game-like environments and architectural models with tens or hundreds of thousands of triangles. Our algorithm can simulate and render sounds at interactive rates on a high-end PC.

Project Website

Interactive Edge-diffraction for Sound Propagation in Complex Virtual Environments

Micah Taylor, Anish Chandak, Zhimin Ren, Christian Lauterbach, and Dinesh Manocha

We present an algorithm for interactive computation of diffraction paths for geometric-acoustics in complex environments. Our method extends ray-frustum tracing to efficiently compute volumetric regions of the sound field caused by long diffracting edges. We compute accurate diffraction paths from each source to the listener and based on the Uniform Theory of Diffraction, attenuate the input audio. The overall approach is general, can handle dynamic scenes, and can be used to compute diffraction and specular reflection paths with relatively little aliasing. We evaluate the accuracy through comparisons with physically validated geometric simulations. In practice, our edge diffraction algorithm can perform sound propagation at interactive rates in dynamic scenarios on a multi-core PC.

Project Website

An Efficient Time-domain Solver for the Acoustic Wave Equation on Graphics Processors

Ravish Mehra, Nikunj Raghuvanshi, Lauri Savioja, Ming C. Lin, and Dinesh Manocha

We present an efficient algorithm for time-domain solution of the wave equation for the purpose of room acoustics. The approach assumes that the speed of sound is spatially invariant. Numerical dispersion errors are controlled by computing an adaptive rectangular decomposition of the environment and using analytical solutions within the rectangular partitions based on the Discrete Cosine Transform. Sixth-order finite-difference transmission operators are used to model propagation across partition interfaces. A novel mapping of the complete solver to graphics processors is presented. It is demonstrated that by carefully mapping all components of the algorithm to match the parallel processing capabilities of graphics processors, significant improvement in performance is gained compared to a CPU-based implementation. Due to the near absence of numerical dispersion, this technique is suitable for both, computing impulse responses for auralization, as well as producing animated sound field visualizations. As a result of using graphics processors, a 1 second long simulation can be performed on a scene of air volume 7500 cubic meters till 1650 Hz in 18 minutes on a desktop computer. The performance of the algorithm is tested for many complex-shaped 3D spaces. The use of graphics processor results in a 25-fold improvement in computation time over a single-threaded CPU-based implementation, while producing numerically identical results. Also, substantial performance gain over a high-order finite-difference time-domain method is observed.To the best of the authors’ knowledge, this is the fastest time-domain solver for the wave equation for modeling room acoustics of large, complex-shaped 3D scenes that generates broad-band results for auralization as well as visualization purposes.

Project Website

Fast and Accurate Specular Reflection Computation using Visibility Culling

Anish Chandak, Lakulish Antani, Micah Taylor, and Dinesh Manocha

We present an efficient technique to compute the potentially visible set (PVS) of triangles in a complex 3D scene from a viewpoint. The algorithm computes a conservative PVS at object space accuracy. Our approach traces a high number of small, volumetric frusta and computes blockers for each frustum using simple intersection tests. In practice, the algorithm can compute the PVS of CAD and scanned models composed of millions of triangles at interactive rates on a multi-core PC.We also use the visibility algorithm to accurately compute the reflection paths from a point sound source. The resulting sound propagation algorithm is 10-20X faster than prior accurate geometric acoustic methods.e present a fast algorithm to perform sound propagation in complex 3D scenes. Our approach computes propagation paths from each source to the listener by taking into account specular reflections and higher-order edge diffractions around finite edges in the scene. We use the well known Biot-Tolstoy-Medwin diffraction model along with efficient algorithms for region-based visibility to cull away primitives and significantly reduce the number of edge pairs that need to be processed. The performance of region-based visibility computation is improved by using a fast occluder selection algorithm that can combine small, connected triangles to form large occluders and perform conservative computations at object-space precision. We show that our approach is able to reduce the number of visible primitives considered for sound propagation by a factor of 2 to 4 for second order edge diffraction as compared to prior propagation algorithms. We demonstrate and analyze its performance on multiple benchmarks.

Project Website

Fast Geometric Sound Propagation with Finite Edge Diffraction

Lakulish Antani, Anish Chandak, Micah Taylor, and Dinesh Manocha

We present a fast algorithm to perform sound propagation in complex 3D scenes. Our approach computes propagation paths from each source to the listener by taking into account specular reflections and higher-order edge diffractions around finite edges in the scene. We use the well known Biot-Tolstoy-Medwin diffraction model along with efficient algorithms for region-based visibility to cull away primitives and significantly reduce the number of edge pairs that need to be processed. The performance of region-based visibility computation is improved by using a fast occluder selection algorithm that can combine small, connected triangles to form large occluders and perform conservative computations at object-space precision. We show that our approach is able to reduce the number of visible primitives considered for sound propagation by a factor of 2 to 4 for second order edge diffraction as compared to prior propagation algorithms. We demonstrate and analyze its performance on multiple benchmarks.

Project Website

RESound: Interactive Sound Rendering for Dynamic Virtual Environments

Micah Taylor, Anish Chandak, Lakulish Antani, and Dinesh Manocha

We present an interactive algorithm and system (RESound) for sound propagation and rendering in virtual environments and media applications. RESound uses geometric propagation techniques for fast computation of propagation paths from a source to a listener and takes into account specular reflections, diffuse reflections, and edge diffraction. In order to perform fast path computation, we use a unified ray-based representation to efficiently trace discrete rays as well as volumetric ray-frusta. RESound further improves sound quality by using statistical reverberation estimation techniques. We also present an interactive audio rendering algorithm to generate spatialized audio signals. The overall approach can handle dynamic scenes with no restrictions on source, listener, or obstacle motion. Moreover, our algorithm is relatively easy to parallelize on multi-core systems. We demonstrate its performance on complex game-like and architectural environments.

Project Website ![]()

iSound: Interactive GPU-based Sound Auralization in Dynamic Scenes

Micah Taylor, Anish Chandak, Qi Mo, Christian Lauterbach, Carl Schissler, and Dinesh Manocha

We present an auralization algorithm for interactive virtual environments with dynamic sources and objects. Our approach uses a modified image source method that computes propagation paths combining direct transmission, specular reflections and edge diffractions up to a given order. We use a novel GPU-based multi-view raycasting algorithm for parallel computation of image sources along with a simple resampling scheme to reduce visibility errors. In order to reduce the artifacts in audio rendering of dynamic scenes, we use a higher order interpolation scheme that takes into account attenuation, cross-fading and delay. The resulting system can perform perform auralization at interactive rates on a high-end PC with NVIDIA GTX 280 GPU with 2-3 orders of reflections and diffraction. Overall, our approach can generate plausible sound rendering for game-like scenes with tens of thousands of triangles. We observe more than an order of magnitude improvement in computing propagation paths over prior techniques.

Project Website ![]()

GSound: Interactive Sound Propagation and Rendering for Games

Carl Schissler and Dinesh Manocha